統計関連学会連合大会で発表した資料です。

使用したコード

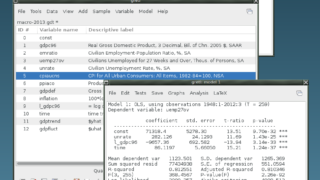

import pandas as pd

import numpy as np

import torch

from torch.utils.data import TensorDataset, DataLoader

import matplotlib.pyplot as plt

import torch.nn as nn

import torch.optim as optim

from sklearn.preprocessing import StandardScaler, OneHotEncoder

from sklearn.metrics import r2_score

import random

# ===== 再現性の確保(★) =====

SEED = 42

random.seed(SEED)

np.random.seed(SEED)

torch.manual_seed(SEED)

# Google Drive マウント

from google.colab import drive

drive.mount('/content/drive', force_remount=True)

# データ読み込み

I3 = pd.read_csv('/content/drive/MyDrive/LSTM/LSTMI3G.csv')

# 特徴量とターゲット定義

n_time = 2

n_features =1

# Corrected base_features to start from 1

base_features = [f'feature_{i+7}' for i in range(n_features)]

#base_features = ['feature_5','feature_7']

#base_features = ['feature_19'] # ← 同時点のi3とほぼ同じという仮定の列

target_col = 'i3' # 小文字に注意

# 都道府県ダミー作成

enc = OneHotEncoder()

pref_dummy = enc.fit_transform(I3[['pref']]).toarray()

pref_cols = [f'pref_{cat}' for cat in enc.categories_[0]]

I3[pref_cols] = pref_dummy

# 都道府県別にスケーリング・lag追加

scaled_dfs = []

feature_scalers = {}

target_scalers = {}

for pref in I3['pref'].unique():

df_pref = I3[I3['pref'] == pref].copy().sort_values(by='year').reset_index(drop=True)

# lag 変数作成(目的変数の自己ラグ)

for i in range(2): #n_timeを2に変更

df_pref[f'target_lag{i+1}'] = df_pref[target_col].shift(i+1)

lag_features = [f'target_lag{i+1}' for i in range(2)] #n_timeを2に変更

# lagが揃う行のみ残す

df_pref = df_pref.dropna(subset=lag_features).reset_index(drop=True)

# 特徴量にlagを追加

local_features = base_features + lag_features

# スケーリング

f_scaler = StandardScaler()

t_scaler = StandardScaler()

df_pref[local_features] = f_scaler.fit_transform(df_pref[local_features])

df_pref[[target_col]] = t_scaler.fit_transform(df_pref[[target_col]])

scaled_dfs.append(df_pref)

feature_scalers[pref] = f_scaler

target_scalers[pref] = t_scaler

# 全都道府県結合

df_sorted = pd.concat(scaled_dfs).sort_values(['pref', 'year']).reset_index(drop=True)

base_features = base_features + lag_features # 全体のbase_featuresにもlagを反映

# テンソル作成(★同時点予測に修正)

input_list, target_list = [], []

pref_list = []

year_list = []

for pref in df_sorted['pref'].unique():

df_pref = df_sorted[df_sorted['pref'] == pref].reset_index(drop=True)

# 都道府県ダミーは系列内で一定(1県=一定ベクトル)

pref_vector = df_pref[pref_cols].iloc[0].values

# ウィンドウの最後の時点を予測

# 例:n_time=7なら、i..i+6 の7行を使って、yは i+6行目(同時点)

for i in range(len(df_pref) - n_time + 1):

x_base = df_pref.iloc[i:i+n_time][base_features].values # 形状: (n_time, base_dim)

x_pref = np.tile(pref_vector, (n_time, 1)) # 形状: (n_time, n_prefs)

x = np.concatenate([x_base, x_pref], axis=1) # 形状: (n_time, n_features)

y = df_pref.iloc[i + n_time - 1][target_col] # ★同時点

y_year = df_pref.iloc[i + n_time - 1]['year'] # ★同時点の年

input_list.append(torch.tensor(x, dtype=torch.float32))

target_list.append(torch.tensor(y, dtype=torch.float32))

pref_list.append(pref)

year_list.append(y_year)

X = torch.stack(input_list) # 形状: (N, n_time, n_features)

y = torch.stack(target_list) # 形状: (N,)

meta_df = pd.DataFrame({'pref': pref_list, 'year': year_list})

n_features = X.shape[2] # LSTM入力次元

# 2021年予測データと学習データの分離(★同時点の年で分ける)

predict_mask = meta_df['year'] == 2021

X_test = X[predict_mask.values]

y_test = y[predict_mask.values]

meta_test = meta_df[predict_mask.values]

train_mask = meta_df['year'] < 2021

X_rest = X[train_mask.values]

y_rest = y[train_mask.values]

# train/val 分割

val_ratio = 0.3

n_total = len(X_rest)

n_val = int(n_total * val_ratio)

n_train = n_total - n_val

X_train, y_train = X_rest[:n_train], y_rest[:n_train]

X_val, y_val = X_rest[n_train:], y_rest[n_train:]

train_dataset = TensorDataset(X_train, y_train)

val_dataset = TensorDataset(X_val, y_val)

batch_size = 32

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True) # 学習はshuffle=Trueを推奨

val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False)

# モデル定義

class LSTMNet(nn.Module):

def __init__(self):

super().__init__()

self.lstm = nn.LSTM(input_size=n_features, hidden_size=32, num_layers=1, batch_first=True)

self.fc = nn.Linear(32, 1)

def forward(self, x):

lstm_out, _ = self.lstm(x) # 形状: (batch, n_time, 32)

out = self.fc(lstm_out[:, -1, :]) # 最後の時点の隠れ状態のみ使用

return out

net = LSTMNet()

criterion = nn.MSELoss()

optimizer = optim.SGD(net.parameters(), lr=0.001)

num_epochs = 5000

history = np.zeros((0, 5))

# 学習ループ

for epoch in range(num_epochs):

net.train()

train_loss = 0.0

train_preds, train_targets = [], []

for x_batch, t_batch in train_loader:

y_pred = net(x_batch)

loss = criterion(y_pred, t_batch.view(-1, 1))

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_loss += loss.item()

train_preds.append(y_pred.detach().numpy())

train_targets.append(t_batch.detach().numpy())

train_loss /= len(train_loader)

train_preds = np.concatenate(train_preds)

train_targets = np.concatenate(train_targets)

train_acc = r2_score(train_targets, train_preds)

net.eval()

val_loss = 0.0

val_preds, val_targets = [], []

with torch.no_grad():

for x_batch, t_batch in val_loader:

y_pred = net(x_batch)

loss = criterion(y_pred, t_batch.view(-1, 1))

val_loss += loss.item()

val_preds.append(y_pred.detach().numpy())

val_targets.append(t_batch.detach().numpy())

val_loss /= len(val_loader)

val_preds = np.concatenate(val_preds)

val_targets = np.concatenate(val_targets)

val_acc = r2_score(val_targets, val_preds)

if epoch % 100 == 0:

print(f'Epoch [{epoch}/{num_epochs}], loss: {train_loss:.5f}, acc: {train_acc:.5f}, val_loss: {val_loss:.5f}, val_acc: {val_acc:.5f}')

history = np.vstack((history, np.array([epoch, train_loss, train_acc, val_loss, val_acc])))

# 学習曲線

plt.plot(history[:, 0], history[:, 1], label='train loss')

plt.plot(history[:, 0], history[:, 3], label='val loss')

plt.xlabel('epoch'); plt.ylabel('loss'); plt.title('Loss'); plt.legend(); plt.show()

plt.plot(history[:, 0], history[:, 2], label='train R2')

plt.plot(history[:, 0], history[:, 4], label='val R2')

plt.xlabel('epoch'); plt.ylabel('R2'); plt.title('R2 Score'); plt.legend(); plt.show()

# 予測(2021)

net.eval()

with torch.no_grad():

y_test_pred = net(X_test)

# 逆変換(prefごとのScalerを使用)

y_test_pred_inv = []

y_test_true_inv = []

for i, row in meta_test.reset_index(drop=True).iterrows():

pref = row['pref']

t_scaler = target_scalers[pref]

y_pred_val = y_test_pred[i].cpu().numpy().reshape(-1, 1)

y_true_val = y_test[i].cpu().numpy().reshape(-1, 1)

y_test_pred_inv.append(t_scaler.inverse_transform(y_pred_val)[0][0])

y_test_true_inv.append(t_scaler.inverse_transform(y_true_val)[0][0])

y_test_pred_inv = np.array(y_test_pred_inv).reshape(-1, 1)

y_test_true_inv = np.array(y_test_true_inv).reshape(-1, 1)

# 出力データフレーム(誤差列も追加)

df_output = meta_test.reset_index(drop=True).copy()

df_output['label'] = y_test_true_inv.flatten()

df_output['output'] = y_test_pred_inv.flatten()

# 誤差(符号あり)・絶対誤差・二乗誤差

errors = df_output['output'] - df_output['label']

df_output['error'] = errors

df_output['abs_error'] = errors.abs()

df_output['sq_error'] = errors**2

# CSV出力

df_output.to_csv('/content/drive/MyDrive/MGDP/predict2021.csv', index=False)

# 散布図

plt.scatter(df_output['label'], df_output['output'])

plt.xlabel("Actual"); plt.ylabel("Predicted")

plt.title("Prediction vs Actual (Year = 2021, same-time)")

plt.grid()

plt.show()

print(df_output)スポンサーリンク

スポンサーリンク